The Oracle of Norwich

Introduction

Recently my sister took an interest in a scavenger hunt centered around the city of Norwich, England. The scavenger hunt consists of an Instagram account that posts riddles that point to a site in the city where a small dragon statuette has been hidden. It’s a cool idea and seems to have gathered a passionate following of riddle-solvers and scavenger hunters.

I live on the other side of the planet so I can’t participate, but I was intrigued with the idea of building a system that could automatically solve these riddles to see how close it could get to real answers. The obvious solution to solving these riddles automatically is to use a hosted large-language model such as ChatGPT. However, most of these riddles require extremely specific knowledge about Norwich. Although modern, general-purpose language models are surprisingly knowledgeable about geography and history, we need something capable of recalling incredibly obscure facts about locations in Norwich.

Recently, Retrieval-Augmented Generation (RAG) bots have picked up in popularity. The idea is to store a database of information and categorize it using something like an embeddings model. When a user wants to retrieve information, the embeddings model is ran against the query, the results are used to find matches in the database, and the user query along with the database results are provided to a language model. This allows language models to access more information than can easily be stored in the model itself. It also allows information to be continuously added or updated without retraining the language model.

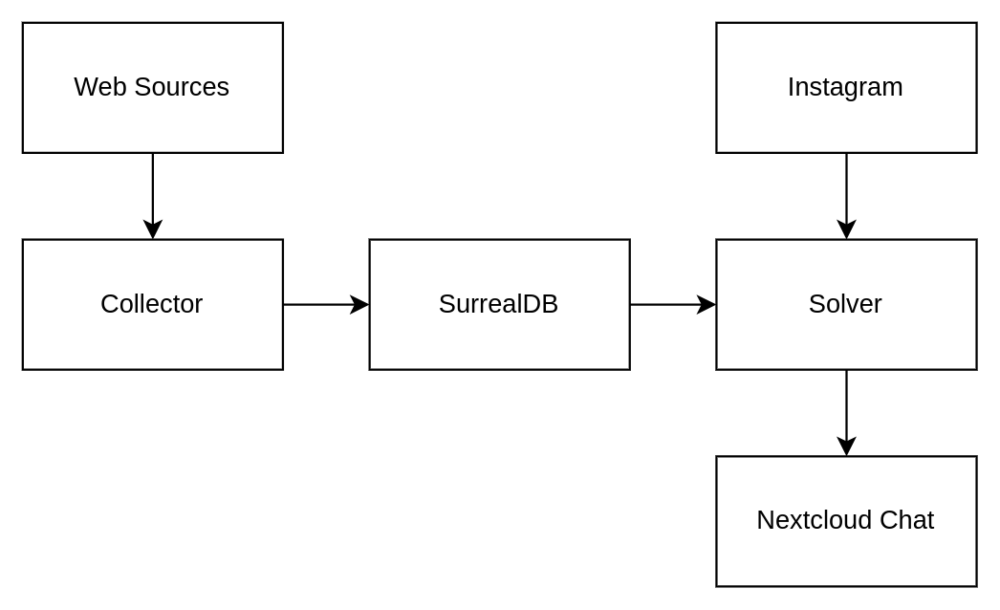

My idea was to build such a system dedicated to solving these Norwich riddles. I decided to use Python along with Textract and BeautifulSoup to webscrape as much information as possible about Norwich from public sources. I then used Rust and HuggingFace’s Candle framework to categorize the scraped data, retrieve relevant data for each riddle, and pass it to a small language model.

Architecture

Collector

Overview

The collector is a Python program that is solely responsible for scraping the web for relevant information about Norwich places. Python was chosen for this because it is incredibly easy to write and iterate on. It also has incredible libraries like BeautifulSoup which make parsing HTML intuitive.

Scraping

Growing up, Wikipedia was always demonized as a terrible, inaccurate source of content. We now know this just isn’t true. So the Norwich Wikipedia page is a good place to start web scraping. Far more valuable to us than the content itself though is the references list at the bottom of the page. At the time of writing, there is an impressive 236 sources referenced throughout the page. Not all of these sources are on the Internet, but a lot of them are. What if we wrote a web scraper that pulled all of the links here and downloaded the content from each?

We could apply this to other sites as well. For example, a sitemap for a website about Norwich. We could make the scraper go to each link and download the content rather than having to manually insert every page ourself into some huge list.

Let us define a list of tuples in Python:

RECURSIVE_PAGES = [

("https://en.wikipedia.org/wiki/Norwich", "mw-references-columns"),

("https://www.visitnorwich.co.uk/visiting-norwich/", "main-hero-multi-image"),

("https://www.visitnorfolk.co.uk/sitemap", "simple-sitemap-wrap"),

("https://www.norfolk-norwich.com/site-map.php", "container")

]

The first item in each tuple is the page of interesting links. However, not every link on the page is relevant. Every Wikipedia page has headers and footers with pages to “Create account”, “Log in”, “Donate”, or information about the page’s licensing, etc. None of this is relevant so the second item in the tuple defines the class of a parent div to the links we really want.

async def resolve_recursive_pages(db):

for (url, parent_div) in RECURSIVE_PAGES:

await insert_page(db, url)

response = requests.get(url)

soup = BeautifulSoup(response.content, "html.parser")

parent_div = soup.find("div", {"class": parent_div})

links = parent_div.find_all('a', href=True)

for link in links:

link = link["href"]

if link.startswith("#"):

continue

if link.lstrip().startswith("/"):

link = "/".join(url.split("/")[0:3]) + link

await insert_page(db, link)

Here we are using the requests library to get a page’s content and then BeautifulSoup to retrieve only the links inside of the div with the target class. All of these links then are stored in a SurrealDB table with another function called insert_page().

After we have a list of pages, we will iterate over all of them, parse over them with Textract or BeautifulSoup, and insert their paragraphs individually into the database.

async def download_paragraphs(db):

results = await db.query("SELECT id, url FROM pages WHERE crawled=false")

results = results[0]["result"]

for result in results:

page_id = result["id"]

url = result["url"]

response = requests.get(url)

# Parse PDFs wiwth textract and HTML with BeautifulSoup

if url.endswith(".pdf"):

pdf_path = "/tmp/pdf.pdf"

open(pdf_path, 'wb').write(response.content)

content = textract.process(pdf_path).decode('utf-8', 'ignore')

content = re.sub(r'(\n *\n)', '\n\n', content)

paragraphs = content.split("\n\n")

else:

soup = BeautifulSoup(response.content, "html.parser")

p_tags = soup.find_all("p")

for p_tag in p_tags:

paragraphs.append(p_tag.text)

for paragraph in paragraphs:

if len(paragraph) < 80:

continue

await insert_paragraph(db, page_id, paragraph, paragraph_index)

paragraph_index = paragraph_index + 1

Solver

Overview

The solver is our Rust application and it will do all the heavy lifting. It will go to the Instagram page to find new riddles, get context using the paragraphs in the database, and attempt to solve them with a language model.

Automatically Retrieving Riddles

We can’t be manually copying riddles from Instagram and pasting them into the Solver, that would be super lame!

I created a new Chrome user data directory specifically for this project by launching Chrome with the --user-data-dir flag. I then manually logged into Instagram so I could get through whatever login protections I needed to. Now I have a valid user data directory that has a valid Instagram session.

We can now use the Headless Chrome Rust library.

let browser = Browser::new(

LaunchOptions::default_builder()

.user_data_dir(Some(user_data_dir))

.headless(true)

.disable_default_args(true)

.build()

.expect("Could not find chrome-executable"),

).unwrap();

let tab = browser.new_tab().unwrap();

tab.navigate_to("https://www.instagram.com/hiddendragonsnorfolk/").unwrap();

tab.wait_until_navigated();

// Click on latest

tab.wait_for_xpath(concat!(

"/html/body/div[2]/div/div/div[2]/div/div/div[1]/div[2]/div/div[1]/section/main/div",

"/div[2]/div/div[1]/div[1]/a/div[3]"

))

.unwrap()

.click();

// Get description

let element = tab.wait_for_xpath(concat!(

"html/body/div[7]/div[1]/div/div[3]/div/div/div/div/div[2]/div/article/div/div[2]/div/",

"div/div[2]/div[1]/ul/div[1]/li/div/div/div[2]/div[1]"

))

.unwrap();

We now have a way to fetch the latest Instagram post and get the description for it. We will use basic heuristics detections like checking line lengths to determine if it is a riddle, and then we will use a new SurrealDB table to track them so we know when a riddle is new or has already been processed.

Context

From the Collector, we have a database of paragraphs that will hopefully have good information about places in Norwich. Now we need a way to find relevant paragraphs from the riddles. For this we will use BAAI’s General English Embedding Model 1.5 (BGE). Vector embedding models like this one can be used to generate vectors from text. The nearness of these vectors to each other allow us to determine the similarity between different texts.

For our purposes, we will iterate over every single paragraph in the database, generate a vector using BGE, and then update the paragraph’s database row with that vector.

let query = "SELECT id, content FROM paragraphs WHERE embeddings=[];";

let results = self.db.query(query).await;

let paragraphs_ids:Vec<Thing> = results.unwrap().take("id").unwrap();

for paragraph_id in paragraphs_ids {

let query = "SELECT content FROM $id";

let results = self.db.query(query).bind(("id", ¶graph_id)).await;

let content: Option<String> = results.unwrap().take("content").unwrap();

if content.is_none() {

continue;

}

let embeddings = self.embeddings_model.get_embeddings(&content.unwrap()).await;

if embeddings.is_err() {

println!("ContextEngine: Error getting embeddings for {}", ¶graph_id);

continue;

}

let embeddings = embeddings.unwrap();

let query = "UPDATE $id SET embeddings=$embeddings";

self.db.query(query).bind(("id", paragraph_id)).bind(("embeddings", embeddings)).await;

}

Later, when a new riddle is found we will generate a vector for each line in the riddle. We’ll take those vectors and use SurrealDB’s vector::similarity::cosine function to find the most similar paragraphs based on their vector’s similarity to the riddle line.

let mut combined_paragraphs = String::new();

for line in riddle.split("\n") {

let query_embeddings = self

.embeddings_model.get_embeddings(&String::from(line)).await.unwrap();

let mut response = self.db

.query("SELECT content, vector::similarity::cosine(embeddings, $query_embeddings) \

AS score \

FROM paragraphs \

WHERE embeddings != [] \

ORDER BY score DESC LIMIT 3")

.bind(("query_embeddings", query_embeddings))

.await

.unwrap();

let context_pargraphs: Vec<String> = response.take("content").unwrap();

for context_paragraph in context_pargraphs {

combined_paragraphs.push_str(&context_paragraph.split_whitespace().collect::<Vec<_>>().join(" "));

combined_paragraphs.push_str("\n\n");

}

}

Using a general embedding model to find similarities between abstract riddle lines and paragraphs about locations is one of the weakest areas of this project and has the most room for improvement.

Passing Everything to a Language Model

We have a way to retrieve riddles and get context for them, now all that’s left to do is pass it all to a language model. In this case we will use Microsoft’s Phi-3 Instruct model. Although it is a small language model, its ability to reason and perform abstract thinking is quite impressive. Thankfully, Microsoft has guides on using it with the HuggingFace Candle framework.

Lets take the context along with the riddle and create a simple prompt from it, following Microsoft’s instruction format:

let prompt = format!(

concat!(

"<|system|>\nYou solve riddles using this context: {}\n",

"<|end|>\n",

"<|user|>\nSolve this riddle: {}\n",

"<|end|>\n",

"<|assistant|>"

),

context,

riddle

);

let result = language_model.run(prompt).await;

Getting the Output

My favorite way for getting push notifications from programs is Nextcloud Chat. It has an easy API to interact with and allows me to get instant notifications from my phone without paying for Twilio messages or similar.

let client = reqwest::Client::new();

let domain = env::var("NC_DOMAIN").unwrap();

let chat_id = env::var("NC_CHAT_ID").unwrap();

let username = env::var("NC_USERNAME").unwrap();

let password = env::var("NC_PASSWORD").unwrap();

let mut body = HashMap::new();

body.insert("token", chat_id.clone());

body.insert("message", message.clone());

let res = client.post(format!("{}/ocs/v2.php/apps/spreed/api/v1/chat/{}", domain, chat_id))

.json(&body)

.header("Content-Type", "application/json")

.header("Accept", "application/json")

.header("OCS-APIRequest", "true")

.basic_auth(username, Some(password))

.send()

.await

.unwrap();

Results

I have been running the program for several days now. The most impressive result:

Riddle: In quiet stone her memory keeps,

A healer’s rest where the city sleeps.

In sight of spires that scrape the sky,

A martyr’s name will never die.

Where courage stood in face of fear,

Her tale endures—forever near.

The riddle describes a location with historical significance, likely related to an individual who was martyred and is remembered for their courage. Given the context of Norwich, it seems plausible that this refers to Nurse Edith Cavell’s grave at Norwich Cathedral.

I later found out this was the correct answer. However, only one paragraph of useful context was provided so this appears to be Phi-3 simply having enough knowledge to solve the riddle entirely on its own.

The worst answers are ones where the context paragraphs provide the wrong location and Phi-3 goes to great lengths to convince you it is indeed the correct location:

Riddle: I sit in a tide of weeds, stone arches shielding me,

Beside a farm where no beasts roam free.

Downhill from a tower with frozen time,

Golden bird in sight, high he climbs

Where wizards’ marks on skin are cast,

I guard my secrets, hidden in the past.

The answer to this riddle is Blickling Hall, a historic estate in Norfolk, England.

Blickling Hall sits within the grounds of Blickling Estate, which features an extensive collection of gardens and woodland areas. Among these are stone arches that provide shelter from the elements, creating pockets of tranquility amidst the natural surrayering weeds. The farm at Blickling Estates has been known for its commitment to organic farming practices, ensuring no animals roam freely as they adhere to strict animal welfare standards.

The tower mentioned could refer to the iconic Blickling Tower, part of the estate’s historic architecture. It is a Grade I listed building with intricate details and ornamentation, often associated with stories or legends due to its age and grandeur.

The “golden bird in sight” likely refers to the golden eagle that can be seen soaring above the grounds from time to time. These majestic birds are not native to England but have been introduced to some estates for conservation purposes.

Sometimes the answer is just clearly not correct:

Riddle: Not near a lock, but near the side.

I hear oinking on the tide.

A caged blood sucker covers me

Can a hunter set me free?

The answer to this riddle is a “sea lion.”

The moral of the story here is that a RAG bot is probably not the best way to solve riddles focusing on a specific area of knowledge. It would be better to create a fine-tune of a language model with all the data you are able to web scrape so that the language model is able to make the associations internally without having to rely on an embeddings model which is inferior at abstract connections.